Graphic:

Excerpt:

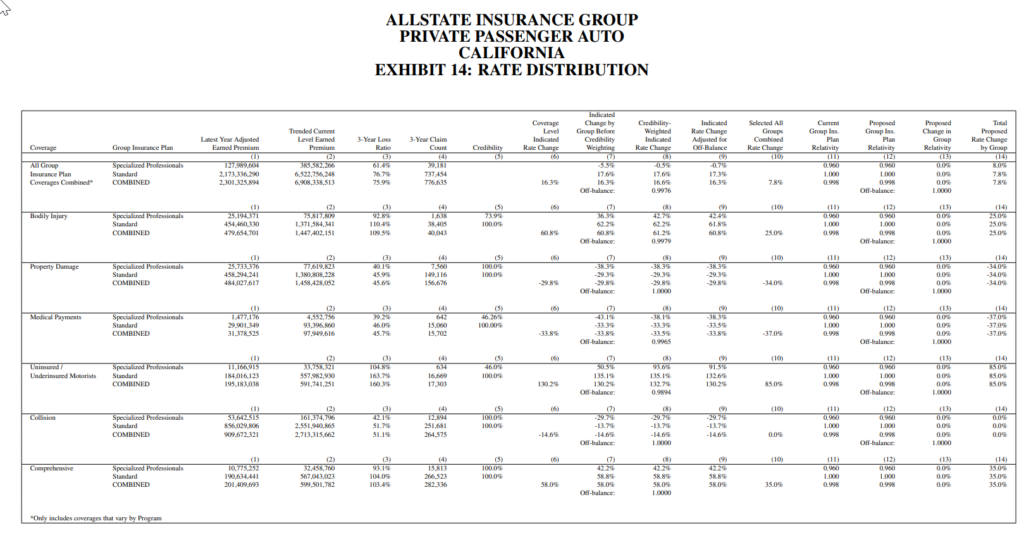

Insurance Commissioner Ricardo Lara should reject Allstate’s proposed $165 million auto insurance rate hike and its two-tiered job- and education-based discriminatory rating system, wrote Consumer Watchdog in a letter sent to the Commissioner today. The group called on the Commissioner to adopt regulations to require all insurance companies industrywide to rate Californians fairly, regardless of their job or education levels, as he promised to do nearly three years ago. Additionally, the group urged the Commissioner to notice a public hearing to determine the additional amounts Allstate owes its customers for premium overcharges during the COVID-19 pandemic, when most Californians were driving less.

Overall, the rate hike will impact over 900,000 Allstate policyholders, who face an average $167 annual premium increase.

Under Allstate’s proposed job-based rating plan, low-income workers such as custodians, construction workers, and grocery clerks will pay higher premiums than drivers in the company’s preferred “professional” occupations, including engineers with a college degree, who get an arbitrary 4% rate reduction.

Author(s): Consumer Watchdog

Publication Date: 22 Sept 2022

Publication Site: PRNewswire