Link: https://www.technologyreview.com/2021/04/01/1021619/ai-data-errors-warp-machine-learning-progress/

Paper link: https://arxiv.org/pdf/2103.14749.pdf

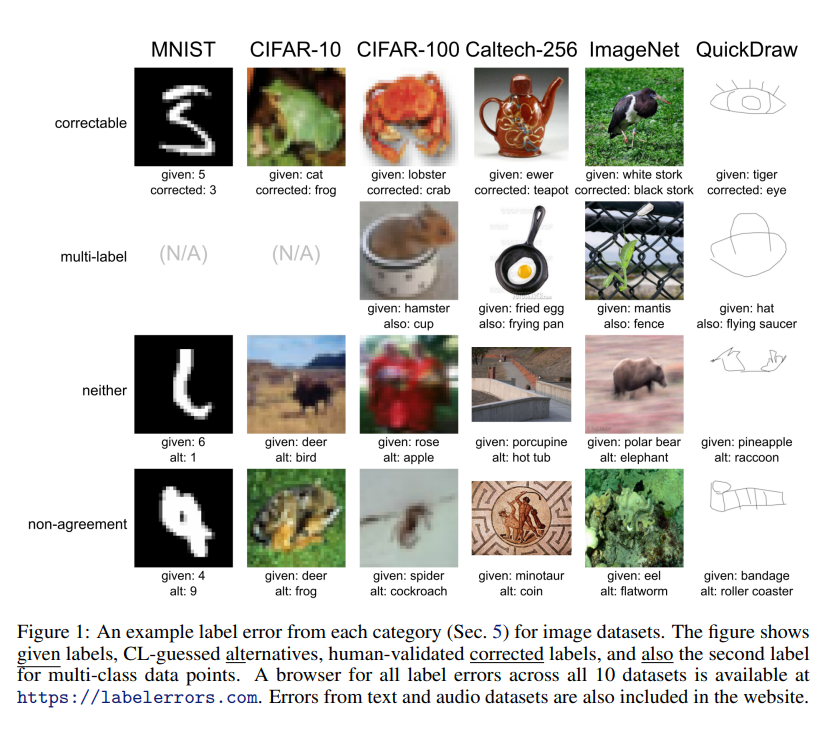

Graphic:

Excerpt:

Yes, but: In recent years, studies have found that these data sets can contain serious flaws. ImageNet, for example, contains racist and sexist labels as well as photos of people’s faces obtained without consent. The latest study now looks at another problem: many of the labels are just flat-out wrong. A mushroom is labeled a spoon, a frog is labeled a cat, and a high note from Ariana Grande is labeled a whistle. The ImageNet test set has an estimated label error rate of 5.8%. Meanwhile, the test set for QuickDraw, a compilation of hand drawings, has an estimated error rate of 10.1%.

Author(s): Karen Hao

Publication Date: 1 April 2021

Publication Site: MIT Technology Review